Guidance on Generative AI Use in the Workplace

Generative AI is the most rapidly adopted technology in human history. It has the potential to completely upend the nature of work, from how we communicate to how we code and think. The foundational models that drive this technology are as vast and opaque as the network of neurons that make up human consciousness itself. Some of the best generative AI tools (like ChatGPT) are incredibly cheap and easy to use.

For some reason, corporate leadership is apprehensive about this.

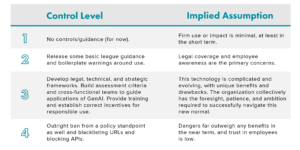

As leaders get a sense that GenAI, and especially Large Language Models, isn’t going away, they want to both protect their companies and identify opportunities to benefit. Below is a table describing the four levels of guidance/controls companies implement on generative AI use in the workplace and what each level implies about leadership’s view of this technology.

If it’s unclear, the correct answer to this multiple-choice question is number 3. Below, we’ll get into why this level of attention is necessary and what that effort looks like as far as enterprise initiatives.

The Risks

To understand what the scope of guidance needs to cover, it can be helpful to separate the risks into two categories – those associated with entering information (often in the form of “prompting” LLMs or “context embeddings”) and those associated with outputs (the “generative” part of GenAI):

- Input Risks: The general risk here is that the information entered into LLMs is privileged in some way. Employees feed in IP, confidential information, or other sensitive data that companies don’t want to lose control of. Here, there can be repercussions from a regulatory standpoint or compliance risk. Additionally, there can be issues from an information security standpoint or contract issues on how data is shared with third parties. The exposure depends on policies from the third-party LLM provider regarding how data is stored and shared.

- Output Risks: These are more pernicious, and they generally arise from using “bad” outputs. They can be “bad” because they are factually incorrect (the word “hallucinations” is surely seeing peak use). Outputs can also be technically correct while lacking the context to provide the best response – think of implementing misguided code, which produces no errors but misinterprets semantic information about data.

Outputs can be biased, too. LLMs are trained using internet data, which can be biased at times (if you can believe it), and foundational models are usually then fed through “Reinforcement Learning from Human Feedback” (RLHF). This architecture is oft-depicted as a monstrous behemoth wearing a smiley-face mask. This bias can lead to not just poor results but also more legal risks – think of discrimination in job descriptions or performance reviews.

The bias and misinformation that creep into outputs are the hardest to detect, meaning that any problems they cause can persist unnoticed. Additionally, privileged information from other firms that the model was trained on can appear in outputs, potentially creating legal exposure for the company.
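To make the input risk concrete, here is a minimal sketch of a screen that flags or redacts sensitive text before a prompt leaves the company. The pattern names, the regular expressions, and the `screen_prompt` and `redact_prompt` helpers are all hypothetical illustrations; a real policy would be defined with legal and information security teams, and simple pattern matching will not catch everything.

```python
import re

# Hypothetical patterns for illustration only; a real list would come from
# the company's legal and security policies.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "confidential_marker": re.compile(r"\b(confidential|internal only)\b", re.IGNORECASE),
}


def screen_prompt(prompt: str) -> list[str]:
    """Return the names of the sensitive patterns found in the prompt."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(prompt)]


def redact_prompt(prompt: str) -> str:
    """Replace each match with a placeholder such as [EMAIL]."""
    for name, pattern in SENSITIVE_PATTERNS.items():
        prompt = pattern.sub(f"[{name.upper()}]", prompt)
    return prompt
```

A check like this could either block the prompt or redact it before it is sent to a third-party provider. Which of the two is appropriate is a policy decision, not a technical one.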

Professional Use

Most of today’s GenAI use in organizations is what we would call “professional use.” Individuals use ChatGPT to be better at their jobs. They use it for research, to develop or synthesize ideas, and to write or edit emails, documentation, or code. Employees are finding infinite uses that help them become more efficient and impactful. AI is their knowledgeable, versatile, and free intern – one who is good at coding in every language, knows how to navigate sub-menus in any software, and performs countless iterations on a problem until the human employee is satisfied.

Split Policy and Guidance

Companies, aware of input and output risks for this type of use, rush to share guidance. They make the mistake of smashing together legal requirements with other AI best practices.

There’s a correct order to generative AI guidance, but because the technology is moving so rapidly, this guidance comes all at once. The old advice of “not sharing information which should not be shared” still applies. The same set of guidance applies to online PDF-to-Word converters or any third party that hasn’t been appropriately vetted. The legal frameworks still apply, and they still come first. Of course, leaders need to coordinate with their legal departments to set these frameworks.

Incentivize Adherence

Once companies establish legal guidance and company policies, they need to shift to ensuring employee adherence. Folks may not adhere to guidance if they benefit from use and don’t want to be told to stop. Leaders need to design incentives such that employees do not keep GenAI use hidden. GenAI super-users are already thinking about these incentives. Will they be reprimanded, or made to await corporate review? If an employee identifies ways to increase job efficiency, will that discovery put their job at risk, or will they be rewarded? Will any revelation of AI assistance on engineering or development invite increased scrutiny or QA requirements?

There’s evidence that the use of assistive technology most improves the performance of the least productive employees. The most boring and repetitive work is the first to be automated, leading to higher employee satisfaction. These are outcomes that all companies want, but incentive structures are not set up to encourage them. There is a counterproductive element of fear when the first and only wave of guidance is legal. A firm-wide culture of trust goes a long way here: within individual teams, across departments, and with leadership. The employees who are quietly the best at using AI need to be found and nurtured – the risk of losing out on their innovations is too great.

Building Good Habits

For employees not using GenAI yet, companies can establish good habits from the start. You don’t need to take a course on prompt engineering to become a good prompt writer. Most people’s prompts are shockingly bad. They’re too simple, have no context, and aren’t iterative. Training materials on prompting abound, both for individual use and for productionized solutions. Companies can pair this training – which can be highly engaging – with the best practices necessary to avoid the input/output risks. Employees can then practice in a safe manner.

Enterprise Use

Professional use may persist as the dominant way GenAI is used in companies. Generative AI is not currently well-suited to talk to other systems. IT architecture is built on the necessity of consistent inputs and outputs. That assumption of reliability is a challenging one when thinking about generative AI. However, as the technology matures, it will become more integrated into the processes and systems in the workplace. The good news is companies have much more transparency into initiatives at the project level, since they require funding and resources and appear in team status updates. The best middle management will be well-equipped to prepare pitches for leadership, and the best leadership will listen.
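The reliability gap described above can be illustrated with a minimal sketch: before a model’s response is handed to a downstream system, it is checked against the structure that system expects. The `REQUIRED_FIELDS` schema and the `parse_model_output` helper are hypothetical, and the example assumes the model was asked to reply in JSON.

```python
import json

# Hypothetical schema: the fields and types a downstream system expects.
REQUIRED_FIELDS = {"category": str, "confidence": float}


def parse_model_output(raw: str) -> dict:
    """Parse raw model text as JSON and check the expected fields and types."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("output is not a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"field {field!r} is missing or not {expected_type.__name__}")
    return data
```

A response that fails the check can be retried or routed to a person rather than passed along, which is one way to keep the “consistent inputs and outputs” assumption intact at the system boundary.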

Enable Early Wins

Leadership needs to first enable financial and technical investment in proof-of-concept use cases. A promising innovation for the company may be covertly built in unsafe environments simply because the employee doesn’t have access to or awareness of sandbox space available to them. After proof-of-concept, companies may move into applications or vendors with limited exposure. An example would be a periodic data dump. This is a good step because it limits exposure, but it also doesn’t advance internal capabilities.

Cut Through Noise of Shiny Toys

Being a technology leader will require entirely rethinking which technology will deliver benefits. There are far too many proposals around using GenAI as a magic box to join information across disparate sources – things that are data architecture problems. We’re not used to transformative technology having its best applications in more creative places, like data visualization or information synthesis. The implications can go all the way to the operating model. Take Tableau GPT, which could change the nature of operational analyst roles (in supply chain/finance/sales), and visualization is just one area. There’s an increasing amount of technology with embedded LLMs in unexpected places. When software like SAP is integrating LLMs, it indicates there is opportunity for use at the enterprise level.

Vendor Evaluation Fundamentals

Evaluate technologies on a case-by-case basis. There are no hard and fast rules for vendor selection regardless of industry or application. However, the general buckets of evaluation criteria apply – how well does this technology meet functional requirements? Does this vendor have a trustworthy track record? What are the costs? Risks? How will the data be protected? On an organizational level, evaluate what makes sense to build in-house, again repurposing the criteria tech leaders are already familiar with when making these decisions. Opacity is a risk as well, since it’s inherent to the neural networks that underpin many of these foundational models. That opacity will only increase with external solution providers and will be a huge consideration where interpretability is valued.

Model choice also matters. There’s far more out there than OpenAI/ChatGPT. Other behemoth general-purpose models from NVIDIA, Google, Meta, Anthropic, and others each provide their own benefits. Some models can be run/hosted locally, which can mitigate some risk. There are also domain-specific models, which even in their infancy are showing promise in applications across healthcare and consumer products. These may prove to be the dominant form of enterprise AI and may continue to grow as a proportion of the IT landscape. Early adopters may build long-term advantages.

Progress with Confidence

The investment required to have managed, productive use of generative AI is substantial. It will pay off. Leadership first needs to build technical and legal controls that are purpose-built for their company. Then, they can build upon good foundational processes for value-based AI use with the great people they have hired.

Clarkston helps companies navigate building a culture of impactful and safe use of generative AI, both at the professional and enterprise level. If you would like to chat about how your company can take the next step, reach out to us today.